Pre and post patching actions - Hooks

General description

If you need to run some actions before and/or after an update, you can use the AutoPatcher Pre&Post Actions feature. It lets you specify a list of AWS Lambda ARNs or Azure Functions to be executed before and/or after the update.

The AutoPatcher Pre&Post Actions feature lets you:

- start/stop instances

- tag instances

- create snapshots

- do many other things; just write your own Lambda or Azure Function

Hook types

In AutoPatcher these actions are called hooks. They can be divided into the following groups:

- Plan-global hooks (a.k.a. event-global hooks, standard hooks, or simply hooks, as opposed to the host hooks described below): hooks that are defined per plan.

  - Pre hooks: executed before any machine starts patching.

  - Post hooks, which are executed:

    - after all machines have finished patching,

    - only if no pre hook failed,

    - regardless of the machines' patching statuses.

- Machine-specific hooks (a.k.a. host hooks): hooks that are defined per machine in a plan.

  - Pre host hooks: executed before the machine starts patching.

  - Post host hooks, which are executed:

    - after the machine has finished patching,

    - only if no pre host hook failed for this machine,

    - regardless of the machine's patching status.

Each hook type and how to configure it are described in more detail below.

Plan-global hooks

A pre/post plan-global hook can be of either of the following types:

- AWS Lambda ARN

- HTTPS endpoint of an Azure Function

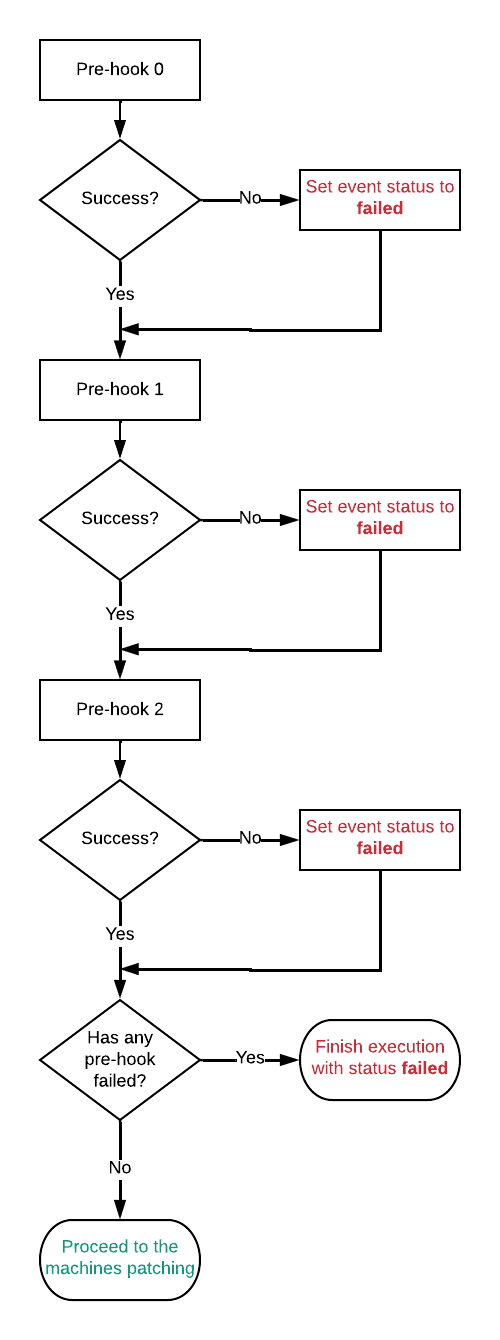

Plan-global hooks execution flow

The diagram below shows the execution flow of the pre hooks.

Hook fail policy

The following policies apply:

- If a pre hook fails, AutoPatcher won't patch any machine and finishes the execution with status failed. A notification about the error is sent. However, all pre hooks after the failed one are still executed.

- Because machine updates don't start if a pre hook fails, you can use pre hooks for actions such as starting machines.

- If a post hook fails, AutoPatcher finishes the execution with status failed and sends a notification about it.

- You can use post hooks to stop machines or to check whether the customer's application is running after the update.
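The policies above can be summarized as a small simulation. This is a hypothetical JavaScript sketch, not AutoPatcher's implementation: hooks are modelled as plain functions returning the result object described in the Output format section, runPlan and patchAll are illustrative names, and it assumes (the document does not say) that the remaining post hooks still run after one post hook fails and that codes below 200 count as failures.

```javascript
// Hypothetical sketch of the plan-global hook fail policy; not AutoPatcher's code.
// A hook is modelled as a function returning { code, msg, output }.

// 200-299 means success; anything else is treated as a failure here
// (codes below 200 are not defined by the document, so this is an assumption).
function succeeded(result) {
  return result.code >= 200 && result.code <= 299;
}

function runPlan(preHooks, patchAll, postHooks) {
  let failed = false;
  // Every pre hook runs, even after an earlier one has failed.
  for (const hook of preHooks) {
    if (!succeeded(hook())) failed = true;
  }
  // A failed pre hook means no machine is patched and no post hook runs.
  if (failed) return { status: 'failed', patched: false };

  // Post hooks run regardless of the machines' patching statuses,
  // so whatever patchAll returns is ignored.
  patchAll();
  // Assumption: the remaining post hooks still run after one of them fails.
  for (const hook of postHooks) {
    if (!succeeded(hook())) failed = true;
  }
  return { status: failed ? 'failed' : 'success', patched: true };
}
```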

How to configure hooks

Creating hooks using AWS Lambda

This section describes how to create and configure pre and post hooks for the patching process using AWS Lambda.

First, create an AWS Lambda function.
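As a starting point, a hook function might look like the hypothetical Node.js skeleton below. It assumes that AutoPatcher passes the input payload (see Hooks input/output payload) as the Lambda event and uses the function's return value as the hook result. runHook is an illustrative name, and this hook does nothing except report the IDs of the machines it received.

```javascript
// Hypothetical minimal AutoPatcher hook for the AWS Lambda Node.js runtime.
// Assumes AutoPatcher passes the input payload as the Lambda event.

// Hook logic kept in a plain function so it can be tested without Lambda.
function runHook(event) {
  const machines = Array.isArray(event.machines) ? event.machines : [];
  const ids = machines.map((machine) => machine.id);
  // Return the result object { code, msg, output }; output must be a string.
  return {
    code: 200,
    msg: `Received ${ids.length} machine(s)`,
    output: JSON.stringify(ids),
  };
}

// Lambda entry point (CommonJS handler).
exports.handler = async (event) => runHook(event);
```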

Then allow your Lambda to be invoked by the AutoPatcher production account by setting the Lambda permissions policy. This can't be done in the AWS console, but you can do it with the AWS CLI:

aws lambda add-permission --function-name your_lambda_name --statement-id StatementName --action 'lambda:InvokeFunction' --principal 'XXXXXXXXXXXX'

where XXXXXXXXXXXX is the AWS Account ID where AutoPatcher is deployed (currently it's 286863837419).

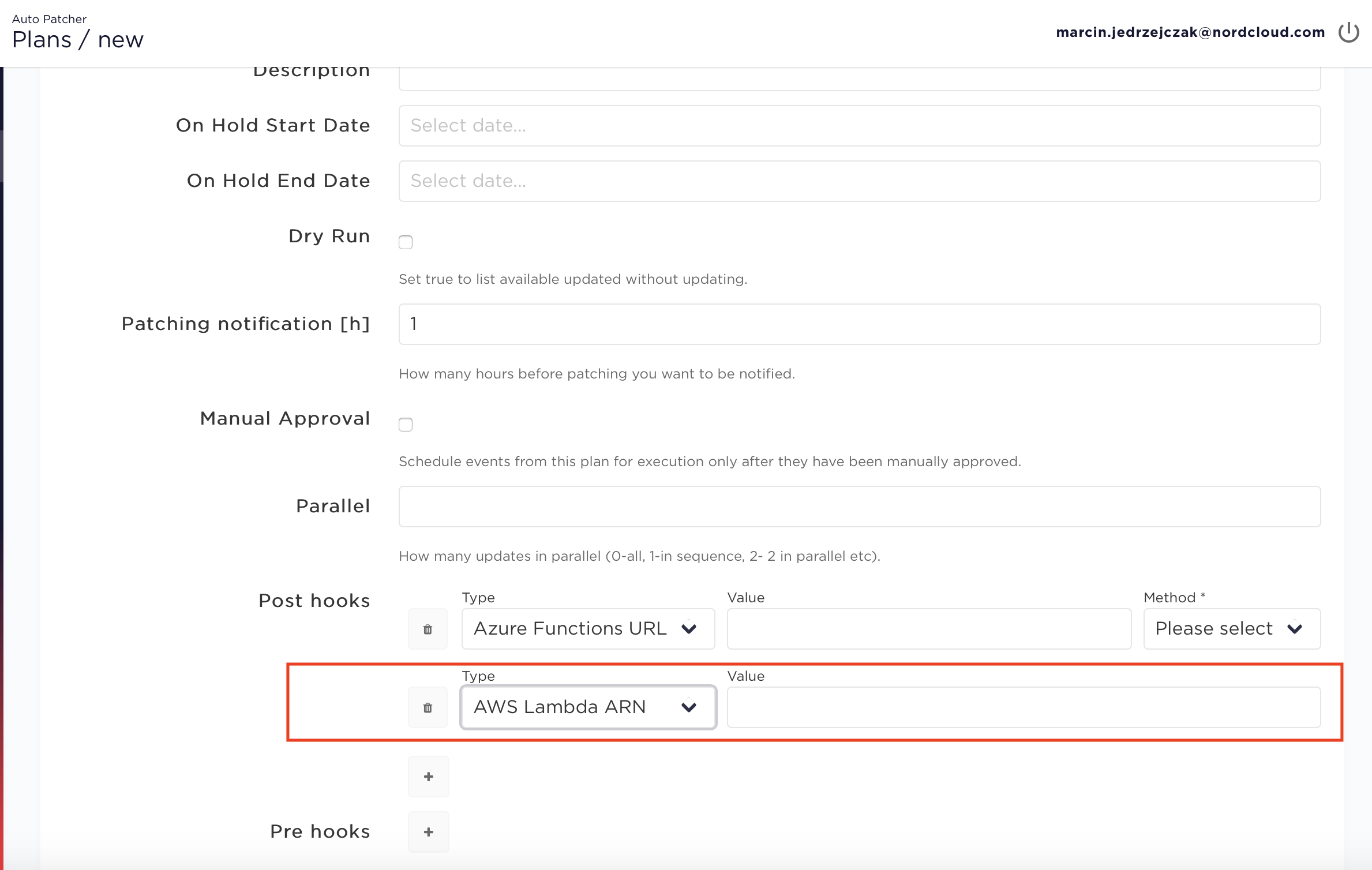

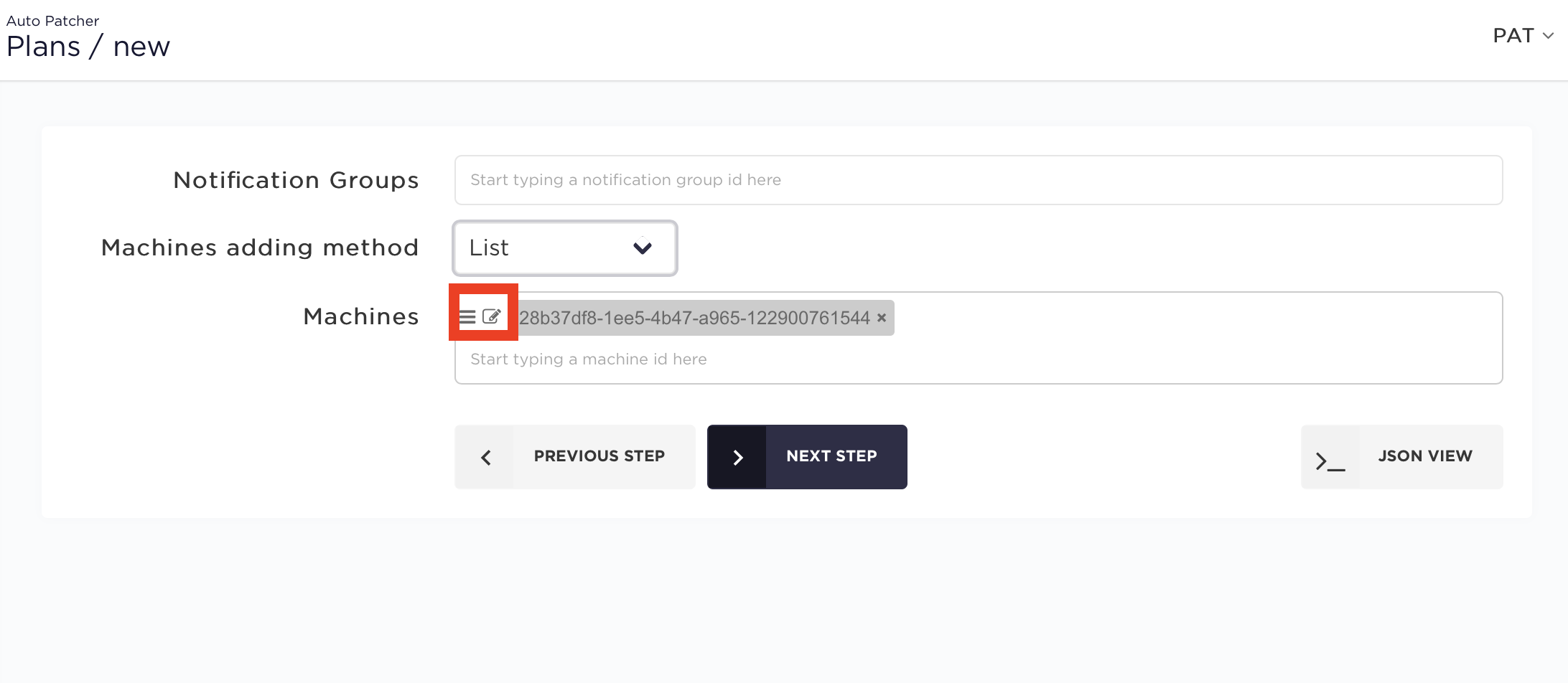

Adding a Lambda ARN to a schedule plan in the UI

Creating hooks using Azure Functions

Using Azure Functions is very similar to using AWS Lambdas. To configure Azure pre/post hooks:

- Create an Azure Function triggered by an HTTP GET request. If you want the function to be triggered with the POST method, you have to add the field method: "POST" to the hook.

- Get the function URL including the security code (e.g. https://functions3a416041.azurewebsites.net/api/HttpTriggerJS1?code=D6eLJ5XkahSab/qQYeipxL54MwhMKoDPKdAuABitVaYTI3LYjthgtQ==).

- Add this URL to the pre hooks or post hooks list instead of a Lambda ARN. Remember to set the hook type (azure in this case).

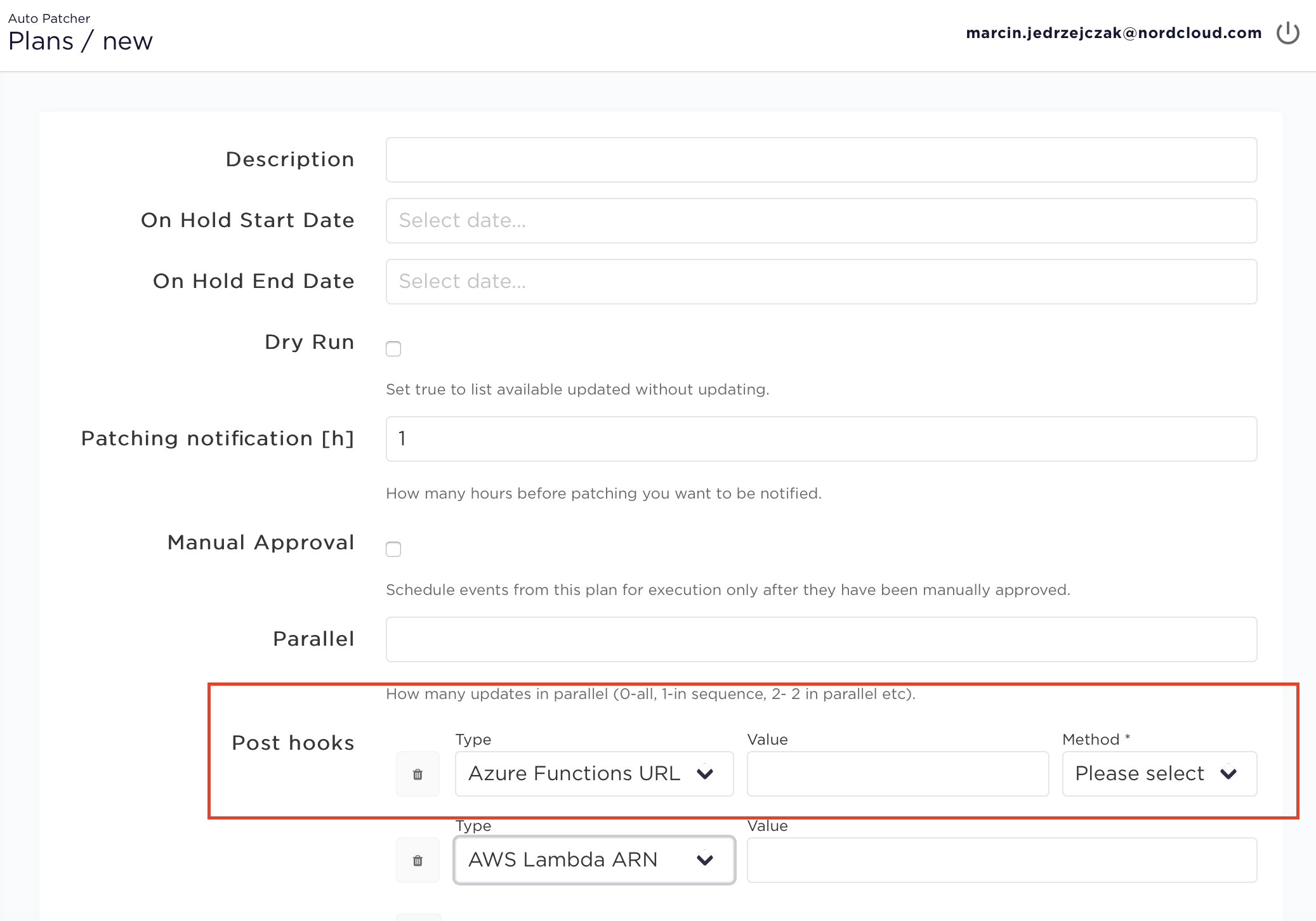

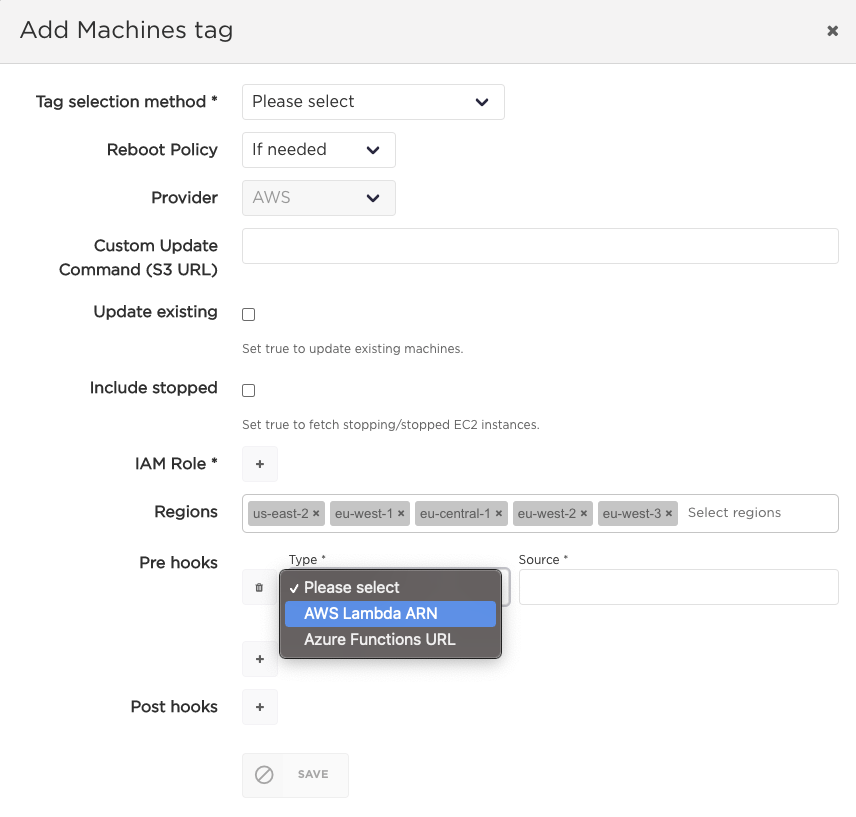

Specifying an Azure hook in the UI when creating or editing a plan

Host hooks (machine-specific)

These hooks are applied to each machine separately, but they are created and configured similarly to standard hooks. The main difference is that, in addition to AWS Lambdas and Azure Functions, a custom script can be used as the source of a host hook.

Uploading the script files

The script files (shell scripts for Linux machines and PowerShell scripts for Windows) need to be uploaded to an S3 bucket and made readable by the AutoPatcher AWS account. This can be achieved in two ways:

- Make the S3 object publicly readable (this may not be acceptable to the customer for security reasons):

aws s3api put-object-acl --bucket <YOUR_BUCKET> --key <SCRIPT_PATH> --acl public-read

- Grant read permission explicitly to the AutoPatcher production AWS account only. This requires the canonical user ID of the AutoPatcher AWS account, which is included in the command below:

aws s3api put-object-acl --bucket <YOUR_BUCKET> --key <SCRIPT_PATH> --grant-read id=708e30907d5e6a5baeb54773a593ca5012c5d08cfc011376f6e960922aad3faf

To specify a host hook, attach it to a machine in a plan by providing its type and source link.

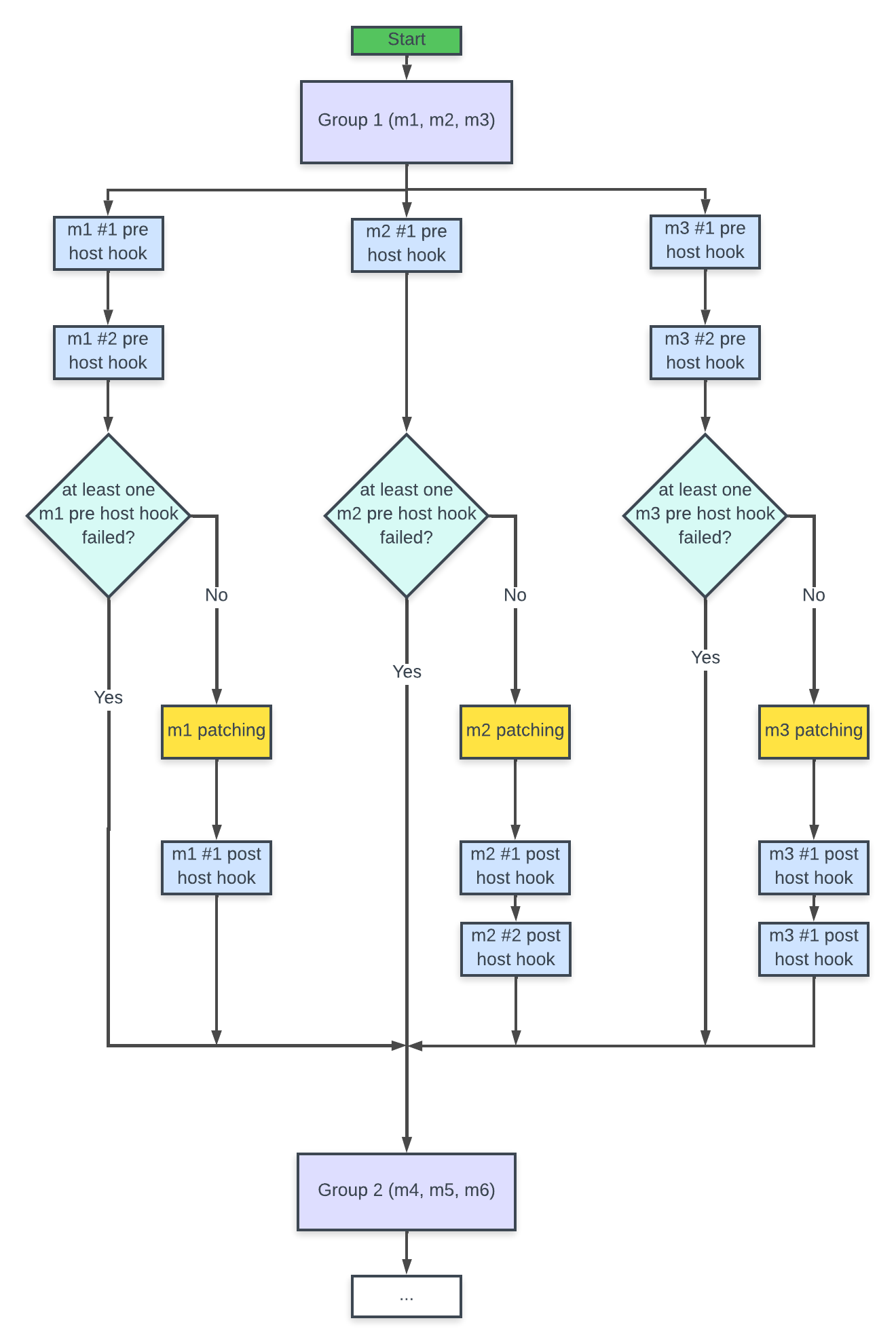

Host hooks execution flow

Adding a host hook in a static plan

Adding a host hook in a dynamic plan

Host hooks fail policy

The following policies apply:

- If a pre host hook fails, AutoPatcher lets the machines that are already updating finish (if the update runs in parallel mode), doesn't continue the patching process for the remaining machines, and finishes the execution with status failed. A notification about the error is sent.

- You can use pre host hooks to check the status of services (such as Docker or a DB server) on specific machines.

- If a post host hook fails, AutoPatcher doesn't continue the patching process for the remaining machines and finishes the execution with status failed. A notification about the error is sent.

- You can use post host hooks to check whether a service is still working on specific machines after the update.
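For a sequential (non-parallel) run, the host hook policies can be sketched as follows. This is a hypothetical illustration, not AutoPatcher's code: runHostHooks and patchMachine are illustrative names, parallel mode is not modelled, and running all of a machine's pre host hooks before checking their results is an assumption.

```javascript
// Hypothetical sequential sketch of the host hook fail policy; not AutoPatcher's code.
// Parallel mode (letting already-updating machines finish) is not modelled.

function succeeded(result) {
  return result.code >= 200 && result.code <= 299;
}

// Each machine: { id, pre: [hook], post: [hook] }; a hook receives the machine.
function runHostHooks(machines, patchMachine) {
  const patched = [];
  for (const machine of machines) {
    // Assumption: all pre host hooks of a machine run before their results are checked.
    const preResults = machine.pre.map((hook) => hook(machine));
    if (!preResults.every(succeeded)) {
      // The machine is not patched, its post host hooks don't run,
      // and the remaining machines are not patched either.
      return { status: 'failed', patched };
    }
    // Post host hooks run regardless of the machine's patching status.
    patchMachine(machine);
    patched.push(machine.id);
    const postResults = machine.post.map((hook) => hook(machine));
    if (!postResults.every(succeeded)) {
      // A failed post host hook also stops patching of the remaining machines.
      return { status: 'failed', patched };
    }
  }
  return { status: 'success', patched };
}
```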

Hooks input/output payload

Input format

AutoPatcher provides a JSON payload as an input when it executes a hook. Its format is described below:

{
  "event_id": string,
  "customer_id": string,
  "machines": [
    {
      "id": string,
      "status": string,
      "name": string,
      "subscription_id": string,
      "subscription_name": string,
      "resource_group_name": string
    },
    ...
  ],
  "hook_outputs": [
    {
      "code": int,
      "message": string,
      "hook": {
        "type": string,
        "source": string,
        "method": string,
        "name": string
      },
      "plan_name": string,
      "event_id": string,
      "output": string,
      "machine_id": string,
      "command_id": string
    },
    ...
  ]
}

Fields description

- machines - contains a list of the machines in the current patching event.

  - If the executed hook is a host hook, this list contains only the machine the hook is defined for.

  - If the hook is executed as part of the event's partial execution (read more).

- hook_outputs - contains a list of all hooks that were executed before the current hook, along with their outputs. More information is provided in the Hook outputs propagation section.

  - plan_name and event_id are present only for a patching event from a pipeline (read more).

  - command_id contains the ID of the SSM command and is present only for host hooks of type script.

  - hook contains the definition of the hook. Its name field can be pre_0, post_1, pre_host_1 and so on. Note that hooks are counted from 0.
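Because hook_outputs can contain entries from several events (in a pipeline) or several machines (for host hooks), a hook that needs one specific previous output may have to filter the list. A hypothetical helper, assuming the field names from the input format above (findHookOutputs is an illustrative name):

```javascript
// Hypothetical helper: returns the hook_outputs entries produced by the hook named
// hookName (e.g. "pre_0"). Extra filter fields such as event_id (pipelines) or
// machine_id (host hooks) must match exactly, because the same hook name can
// appear for several events or machines.
function findHookOutputs(payload, hookName, filter = {}) {
  const entries = Array.isArray(payload.hook_outputs) ? payload.hook_outputs : [];
  return entries.filter(
    (entry) =>
      entry.hook !== undefined &&
      entry.hook.name === hookName &&
      Object.entries(filter).every(([key, value]) => entry[key] === value),
  );
}
```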

Output format

The return value of the hook function should be the following JSON object:

{
  "code": number,
  "msg": string,
  "output": string
}

Fields description

- code - a number indicating the hook's execution status. If it's in the 200-299 range, the hook is considered successful; if it's greater than 299, the hook is considered failed.

- msg - a text message that can be shown in the GUI.

- output - an arbitrary string that can be used to pass data to other hooks, as described in the next section.
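A hypothetical pair of helpers for building and checking a hook result follows. The field names come from the format above; hookResult and isSuccessful are illustrative names, and treating codes below 200 as failures is an assumption, since the document only defines the 200-299 and greater-than-299 ranges.

```javascript
// Hypothetical helpers for the hook result object { code, msg, output }.

// Builds a result; output must be a string, so the caller JSON-encodes
// structured data before passing it in.
function hookResult(code, msg, output = '') {
  if (!Number.isInteger(code)) {
    throw new TypeError(`code must be an integer, got ${code}`);
  }
  return { code, msg: String(msg), output: String(output) };
}

// 200-299 means success and >299 means failure; codes below 200 are
// treated as failures here (assumption).
function isSuccessful(result) {
  return result.code >= 200 && result.code <= 299;
}
```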

Hook outputs propagation

After a hook is executed, the code, msg and output fields of its result are stored and propagated to other hooks through the hook_outputs field as follows:

- code -> hook_outputs[].code

- msg -> hook_outputs[].message

- output -> hook_outputs[].output

This lets a hook access data generated by other hooks: not only hooks from the same event as the current hook, but also hooks from all previous events in the same pipeline execution. For example, a pre hook can check all the machines in the plan, start those that are stopped, and return the list of their IDs as its output. A post hook can then retrieve this output and stop only the machines that were started by the pre hook.
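The start/stop example above can be sketched with two hypothetical hook functions. startMachine and stopMachine are placeholders for real cloud API calls. The status value "stopped" is an assumption, because the possible machine statuses aren't listed here. The post hook also assumes the pre hook is the first pre hook of the plan, so its name is pre_0.

```javascript
// Hypothetical pre/post hook pair showing output propagation.
// startMachine/stopMachine are placeholders; "stopped" is an assumed status value.

// Pre hook: start stopped machines and return their IDs as a JSON string.
function preHook(payload, startMachine) {
  const startedIds = [];
  for (const machine of payload.machines) {
    if (machine.status === 'stopped') {
      startMachine(machine.id);
      startedIds.push(machine.id);
    }
  }
  return { code: 200, msg: `Started ${startedIds.length} machine(s)`, output: JSON.stringify(startedIds) };
}

// Post hook: read the pre hook's output from hook_outputs and stop only those machines.
// Assumes the pre hook above is the plan's first pre hook (name "pre_0").
function postHook(payload, stopMachine) {
  const entry = (payload.hook_outputs || []).find((e) => e.hook && e.hook.name === 'pre_0');
  const startedIds = entry && entry.output ? JSON.parse(entry.output) : [];
  for (const id of startedIds) {
    stopMachine(id);
  }
  return { code: 200, msg: `Stopped ${startedIds.length} machine(s)`, output: '' };
}
```

In a pipeline, hook_outputs may contain a pre_0 entry for every previous event, so a real post hook would also match on event_id.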

Starting and stopping Azure VMs in hooks

Create Azure Active Directory App registration

To create an app registration, go to Azure Active Directory → App registrations → New registration and provide a meaningful name (the rest can stay at the defaults).

After creating it, open the app registration, navigate to Certificates and Secret Keys, and generate a new secret key; you will need it later.

Assign a role to all VMs

To assign the role, go to Virtual Machines and, for each machine that needs to be started and stopped, go to:

IAM → Add role assignment, select the Virtual Machine Contributor role, and in the Select field search for your app registration name.

Create functions

These scripts work only for machines that were added to AutoPatcher with their Azure Subscription ID and Resource Group Name. All other machines are skipped.

Both scripts require the ms-rest-azure and azure-arm-compute packages, which can be installed via npm.

Create the functions in a Node.js environment. I won't describe all the steps here because there are plenty of ways to do it (I used the Azure CLI and the VS Code Azure Functions extension). The authorization level should be anonymous, and the function should support the HTTP POST method.

Code for starting machines (waits for the machines to boot):

const msRestAzure = require('ms-rest-azure');
const ComputeManagementClient = require('azure-arm-compute');

// Builds the HTTP response; its body is the hook result object AutoPatcher expects.
const httpRes = (code, msg) => {
  return {
    status: code,
    body: {
      "code": code,
      "msg": msg,
    },
  };
};

module.exports = async function (context, req) {
  try {
    let machines = req.body.machines;
    // Log in with the app registration's credentials (see the env variables below).
    let creds = await msRestAzure.loginWithServicePrincipalSecret(
      process.env.AZURE_CLIENT_ID,
      process.env.AZURE_APPLICATION_SECRET,
      process.env.AZURE_TENANT
    );
    let waitObjects = [];
    for (let machine of machines) {
      // Skip machines added without their Azure subscription and resource group.
      if (!machine.subscription_id || !machine.name || !machine.resource_group_name) {
        continue;
      }
      try {
        let computeClient = new ComputeManagementClient(creds, machine.subscription_id);
        // Use the part of the machine name before the first dot as the VM name.
        waitObjects.push(computeClient.virtualMachines.start(machine.resource_group_name, machine.name.split('.')[0]));
      } catch (error) {
        context.log(`Error while starting ${machine.name} - ${error.message}`);
      }
    }
    // Wait until all start operations finish; if any of them fails, the hook returns 500.
    await Promise.all(waitObjects);
    context.res = httpRes(200, "Instances started");
  } catch (error) {
    context.res = httpRes(500, error.message);
  }
};

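Code for stopping machines (does not wait for the machines to stop). Note that there are two ways of stopping a VM: powering it off without releasing its resources (still billed) or deallocating it, which releases the resources (not billed):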

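const msRestAzure = require('ms-rest-azure');
const ComputeManagementClient = require('azure-arm-compute');

// Builds the HTTP response; its body is the hook result object AutoPatcher expects.
const httpRes = (code, msg) => {
  return {
    status: code,
    body: {
      "code": code,
      "msg": msg,
    },
  };
};

module.exports = async function (context, req) {
  try {
    let machines = req.body.machines;
    // Log in with the app registration's credentials (see the env variables below).
    let creds = await msRestAzure.loginWithServicePrincipalSecret(
      process.env.AZURE_CLIENT_ID,
      process.env.AZURE_APPLICATION_SECRET,
      process.env.AZURE_TENANT
    );
    for (let machine of machines) {
      // Skip machines added without their Azure subscription and resource group.
      if (!machine.subscription_id || !machine.name || !machine.resource_group_name) {
        continue;
      }
      try {
        let computeClient = new ComputeManagementClient(creds, machine.subscription_id);
        // Use the part of the machine name before the first dot as the VM name.
        let vmName = machine.name.split('.')[0];
        const logError = (error) => context.log(`Error while stopping ${machine.name} - ${error.message}`);
        // power off and do not release resources (billed):
        // computeClient.virtualMachines.powerOff(machine.resource_group_name, vmName).catch(logError);
        // power off and release resources (not billed):
        // not awaited, so failures are logged instead of becoming unhandled rejections
        computeClient.virtualMachines.deallocate(machine.resource_group_name, vmName).catch(logError);
      } catch (error) {
        context.log(`Error while stopping ${machine.name} - ${error.message}`);
      }
    }
    context.res = httpRes(200, "Instances stopping initiated");
  } catch (error) {
    context.res = httpRes(500, error.message);
  }
};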
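Both scripts above pick their target VMs the same way, so that logic could be factored into a plain function and tested without Azure credentials. A hypothetical sketch (azureTargets and the returned field names are illustrative, not part of either package):

```javascript
// Hypothetical helper mirroring the machine selection in both scripts:
// skip machines without Azure details and derive the VM name from the machine name.
function azureTargets(machines) {
  const targets = [];
  for (const machine of machines) {
    if (!machine.subscription_id || !machine.name || !machine.resource_group_name) {
      continue;
    }
    targets.push({
      subscriptionId: machine.subscription_id,
      resourceGroup: machine.resource_group_name,
      // Part of the machine name before the first dot.
      vmName: machine.name.split('.')[0],
    });
  }
  return targets;
}
```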

Add environment variables for both functions

To add environment variables to an Azure Function, go to its application settings.

Both functions require three variables:

- AZURE_CLIENT_ID - the ID of the app registration created in the first step.

- AZURE_APPLICATION_SECRET - the secret key generated in the first step.

- AZURE_TENANT - the Azure Active Directory ID (found in Properties).
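A missing setting would otherwise surface only as a less obvious login error, so the functions could check the three settings up front. Here is a hypothetical helper (readAzureSettings is an illustrative name). It relies on Azure Functions exposing application settings to the code as environment variables.

```javascript
// Hypothetical helper: reads the three settings both functions need and fails
// fast with a clear message if any of them is missing.
const REQUIRED_SETTINGS = ['AZURE_CLIENT_ID', 'AZURE_APPLICATION_SECRET', 'AZURE_TENANT'];

function readAzureSettings(env = process.env) {
  const missing = REQUIRED_SETTINGS.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing application settings: ${missing.join(', ')}`);
  }
  return {
    clientId: env.AZURE_CLIENT_ID,
    secret: env.AZURE_APPLICATION_SECRET,
    tenant: env.AZURE_TENANT,
  };
}
```

If it is called inside the outer try block of either script, the thrown error ends up in the 500 response as its msg.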

Pass functions as hooks

The last step is to add both functions to AutoPatcher as hooks (they can also be used as host hooks). The starting function should probably be the first of the pre hooks, and the stopping function the last of the post hooks. The HTTP method should not matter.